Predicting Code Comprehension: A Novel Approach to Align Human Gaze with Code using Deep Neural Networks

Understanding code is essential for software developers, forming the basis for maintaining and evolving software systems. The ability to comprehend code efficiently allows developers to make changes with fewer errors. Traditional methods for assessing code quality, like McCabe’s cyclomatic complexity, focus on code metrics but often neglect the human aspect of code comprehension. Recent research indicates that analyzing how developers read and experience code can provide insights into its quality. This study explores a novel approach that leverages deep neural networks to align a developer’s eye gaze with code tokens, predicting code comprehension and perceived difficulty.
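To make the contrast concrete, here is a minimal sketch of a purely code-based metric. It approximates McCabe's cyclomatic complexity for Python source by counting decision points and adding one. The function name `cyclomatic_complexity` and the set of counted node types are assumptions chosen for illustration; real tools handle more constructs.

```python
import ast

# Node types counted as decision points in this simplified sketch.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity as (number of decision points) + 1."""
    tree = ast.parse(source)
    decisions = 0
    for node in ast.walk(tree):
        if isinstance(node, _BRANCH_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` contributes two additional branches.
            decisions += len(node.values) - 1
    return decisions + 1


snippet = """
def classify(n):
    if n < 0 and n % 2 == 0:
        return "negative even"
    for i in range(n):
        if i == 3:
            return "has three"
    return "other"
"""

complexity = cyclomatic_complexity(snippet)
print(complexity)
```

The score depends only on the structure of the code. Two developers who experience the same snippet very differently would still be assigned the same number, which is the gap the gaze-based approach aims to address.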

Our Research

We have developed a deep neural network that predicts code comprehension by aligning a developer’s eye gaze with code tokens. This approach goes beyond purely code-based metrics by integrating human gaze data, offering a more holistic view of code quality.

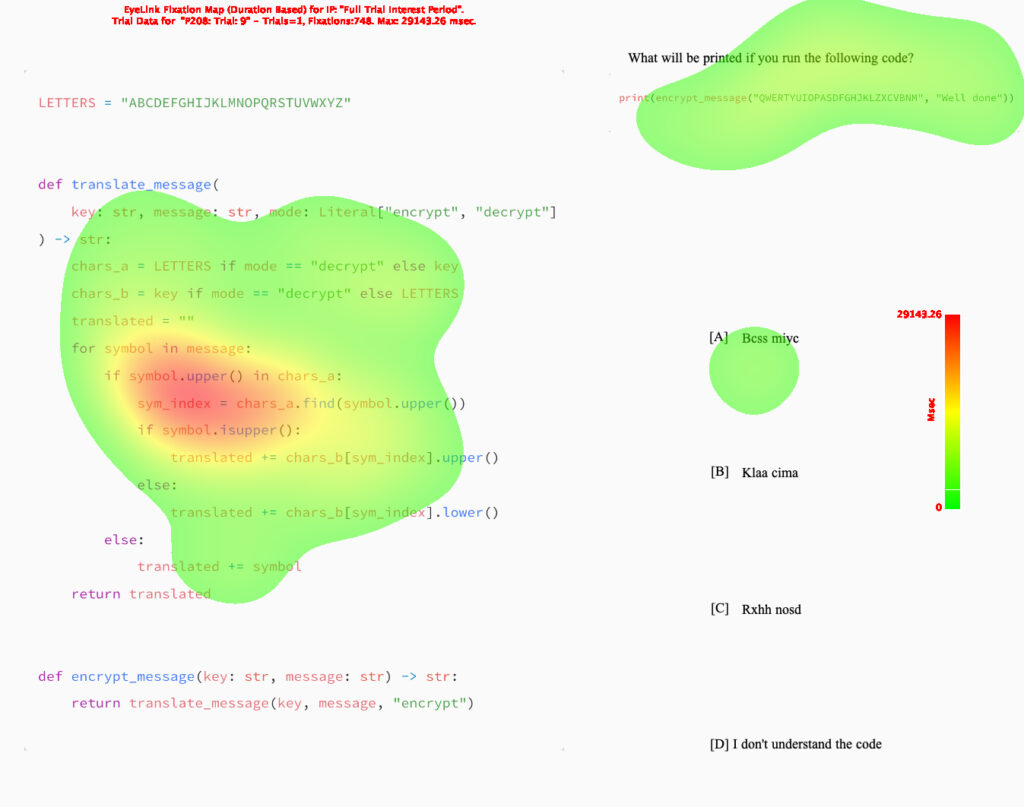

The study involved 27 participants who worked on 16 short code comprehension tasks while an eye tracker recorded their eye movements. The collected data included fine-grained gaze information, which was then used to train the neural network.

Key Findings

- Enhanced Prediction Accuracy: The deep neural network model significantly outperformed state-of-the-art reference methods in predicting both code comprehension and perceived difficulty.

- Human Gaze Alignment Adds Value: Models that aligned human gaze with code tokens performed better than those relying on code alone or on gaze data alone.

- Potential of Human-Inclusive Code Quality Measures: The findings suggest applications in developing human-inclusive code evaluation systems, improving tools for developers to understand and refactor code more effectively.

Detailed Insights

Methodology

- Data Collection: Participants completed a set of code comprehension tasks while their eye movements were recorded. The code snippets varied in complexity and were representative of real-world tasks.

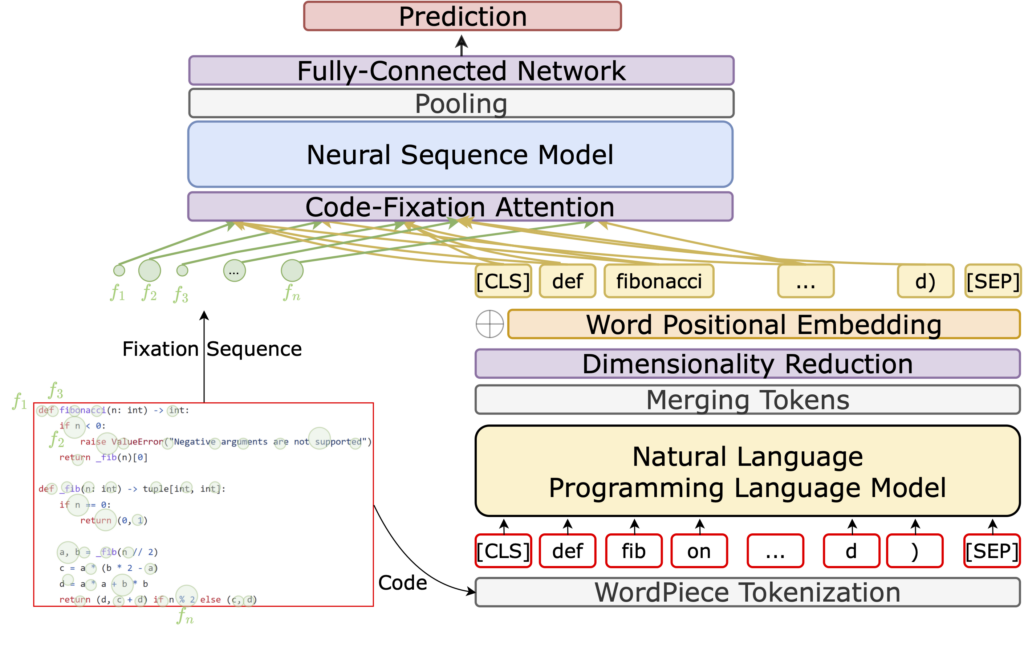

- Neural Network Design: The deep neural sequence model took both the eye-tracking data and the code as input. It used a language model trained on both natural language and programming languages (an NL-PL model) to extract contextualized code-token embeddings, and aligned these embeddings with the gaze data via a code-fixation attention mechanism.
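The idea of attending from fixations to code tokens can be sketched as follows. This is a toy illustration of scaled dot-product attention, not the architecture used in the study: the function names, the two-dimensional embeddings, and the fixation query vector are all made up for the example, and a real model would learn these representations.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(fixation_query, token_embeddings):
    """Scaled dot-product attention of one fixation over all code tokens.

    Returns the attention weights (one per token) and the weighted
    average of the token embeddings (the fixation's code context).
    """
    dim = len(fixation_query)
    scores = [dot(fixation_query, emb) / math.sqrt(dim) for emb in token_embeddings]
    weights = softmax(scores)
    context = [
        sum(w * emb[i] for w, emb in zip(weights, token_embeddings))
        for i in range(dim)
    ]
    return weights, context


# Hypothetical contextualized embeddings for three code tokens.
tokens = ["if", "count", ">"]
token_embeddings = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

# Hypothetical query vector derived from one fixation's features.
fixation_query = [0.0, 3.0]

weights, context = attend(fixation_query, token_embeddings)
most_attended = tokens[weights.index(max(weights))]
print(most_attended, [round(w, 3) for w in weights])
```

In this toy setup the fixation places most of its weight on the token whose embedding best matches the query, and the resulting context vector is what a downstream sequence model would consume for that fixation.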

Results

- Performance: The deep neural network achieved an AUC (Area Under the Curve) of 0.746 for predicting code comprehension and 0.739 for predicting perceived difficulty, significantly outperforming previous reference models.

- Human Gaze Integration: Aligning fixations with code tokens proved beneficial for model performance. Models that relied solely on code or solely on gaze data performed worse than the multi-modal model.
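For readers unfamiliar with the metric, the sketch below computes AUC on a handful of made-up predictions, assuming AUC here refers to the area under the ROC curve. Under that reading, AUC equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, so 0.5 corresponds to chance and 1.0 to a perfect ranking. The labels and scores are invented for illustration and are not data from the study.

```python
def roc_auc(labels, scores):
    """ROC AUC via pairwise comparison of positives and negatives.

    Counts the fraction of (positive, negative) pairs in which the
    positive example has the higher score; ties count as half.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Made-up example: 1 = snippet comprehended, 0 = not comprehended.
labels = [1, 0, 1, 0, 1]
scores = [0.9, 0.4, 0.6, 0.7, 0.8]

auc = roc_auc(labels, scores)
print(round(auc, 3))
```

The pairwise formulation is quadratic in the number of examples, which is fine for a small study but would normally be replaced by a rank-based computation on larger datasets.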

Conclusion

The results provide evidence for the potential of a more human-inclusive model of code quality, one capable of detecting when and where a developer may have difficulty understanding code. Integrated into the IDE or the continuous integration pipeline, such a model could help developers write better code in the first place, or point out and prioritize segments for refactoring or bug fixing. It could also support developers directly by detecting when they may not comprehend the code they are working on and suggesting that they take a break or ask a colleague.

Although the current approach is based on eye movement data collected for a set of code snippets, the findings show that applying it to new code snippets that were not seen during training yields good results. Approaches that generate synthetic eye movement data might even make it possible to apply the model to arbitrary code snippets without eye tracking, opening up further opportunities.
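Testing on unseen snippets requires that no snippet appears in both the training and the test data. The sketch below shows one simple way to enforce this by splitting trials at the snippet level. It is an illustration only: the evaluation protocol is not described here, the record fields `participant_id` and `snippet_id` are hypothetical, and the example assumes every participant completed every task.

```python
import random


def split_by_snippet(records, test_fraction=0.25, seed=0):
    """Split trial records so that each snippet is in train or test, never both."""
    snippet_ids = sorted({r["snippet_id"] for r in records})
    rng = random.Random(seed)
    rng.shuffle(snippet_ids)
    n_test = max(1, round(len(snippet_ids) * test_fraction))
    test_ids = set(snippet_ids[:n_test])
    train = [r for r in records if r["snippet_id"] not in test_ids]
    test = [r for r in records if r["snippet_id"] in test_ids]
    return train, test


# One record per (participant, snippet) trial: 27 participants, 16 snippets.
records = [
    {"participant_id": p, "snippet_id": s}
    for p in range(27)
    for s in range(16)
]

train, test = split_by_snippet(records)
train_snippets = {r["snippet_id"] for r in train}
test_snippets = {r["snippet_id"] for r in test}
print(len(train), len(test), sorted(test_snippets))
```

Splitting by trial instead would let the same snippet leak into both sets, so a model could score well by memorizing snippets without generalizing to new code.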